Sitecore Forms and the Content-Security-Policy (CSP)

The situation

A site is using Sitecore XM/XP and Sitecore Forms and has implemented a Content Security Policy (CSP) header, which is a best practice. However, Sitecore Forms does not always cope well with a strict CSP, because the policy can block scripts that Sitecore Forms relies on. We had a (rather big) content site that had tightened its CSP after a recent penetration test (the CSP is managed in Sitecore, so it can be adapted per site). After this change we noticed that the forms built with Sitecore Forms were not working anymore. To be more precise: the form rendered fine, but submitting it failed when the form included a "Redirect to Page" action.

The error

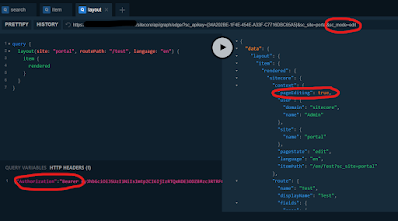

Luckily the error in the browser console was pretty clear: the CSP header was blocking the script.

The message reads: "Executing inline script violates the following Content Security Policy directive 'script-src ...'. Either the 'unsafe-inline' keyword, a hash ('sha256-Dcwc6bB3ob8DnpIRKtqhRwu0Wl6bkf7uLnQFk3g6bPQ='), or a nonce ('nonce-...') is required to enable inline execution. The action has been blocked."
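The hash option in this message is a hash of the script itself, not of the policy. As a minimal sketch (the class and method names are hypothetical, not part of Sitecore or .NET), a CSP hash source is the base64-encoded SHA-256 digest of the inline script's exact UTF-8 text, wrapped as 'sha256-...'. Because any change to the script text changes the hash, this route only works for inline scripts that are identical on every request; whether the script Sitecore Forms renders is that stable is not verified here.

```csharp
using System;
using System.Security.Cryptography;
using System.Text;

// Hypothetical helper: builds a CSP hash source for one inline script.
public static class CspHash
{
    // The input must be the exact text between <script> and </script>;
    // changing even one whitespace character produces a different hash.
    public static string ForInlineScript(string scriptText)
    {
        using (var sha = SHA256.Create())
        {
            byte[] digest = sha.ComputeHash(Encoding.UTF8.GetBytes(scriptText));
            // CSP expects the base64 (not hex) encoding of the digest.
            return "'sha256-" + Convert.ToBase64String(digest) + "'";
        }
    }
}
```

Calling `CspHash.ForInlineScript(scriptText)` on the script's contents yields a source expression of the same shape as the one in the console message, which could then be added to script-src.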

The solution

As the code that outputs the inline script lives in the Sitecore assemblies, adding a nonce to it does not seem to be an option. Adding 'unsafe-inline' everywhere is not a good option either, as that would weaken the CSP dramatically. So we went for a third option: add 'unsafe-inline' only when there is a form on the page.

Adding unsafe-inline conditionally

First of all, we add an indicator to the HttpContext that tells us whether there is a form on the page. This can be done in Form.cshtml (located in \Views\FormBuilder), which is the main cshtml file of Sitecore Forms. But as we are using SXA, it can also be done in the SXA Forms wrapper: Sitecore Form Wrapper.cshtml (located in \Views\Shared). As we already had some customization in this file to add translations (see my previous post on this topic), we added a few lines there:

```cshtml
@{
    // Flag the current request: this page renders a Sitecore Form
    var context = HttpContext.Current;
    context.Items["WeHaveAForm"] = "Y";
}
```

You can name the context item whatever you want of course.

Now we need to act on this context item. Again, there are options. We already had some code that placed a CSP in the header based on a value set on the Site item in Sitecore. If you do not, a generic solution is to put the logic in the Application_EndRequest handler in Global.asax:

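```csharp
// In Global.asax.cs (needs using System, System.Text.RegularExpressions and System.Web)
protected void Application_EndRequest(object sender, EventArgs e)
{
    // Only act when the form wrapper flagged this request
    var formFlag = HttpContext.Current?.Items["WeHaveAForm"];
    if (!"Y".Equals(formFlag))
    {
        return;
    }

    // No CSP header on this response: nothing to relax
    var csp = Response.Headers["Content-Security-Policy"];
    if (string.IsNullOrEmpty(csp))
    {
        return;
    }

    // Add 'unsafe-inline' to script-src; the lookahead skips script-src-elem/-attr
    csp = Regex.Replace(csp, @"\bscript-src(?=\s|;|$)", "script-src 'unsafe-inline'");

    // Remove all nonces (in every directive): with a nonce present, 'unsafe-inline' is ignored
    csp = Regex.Replace(csp, @"\s*'nonce-[^']+'", string.Empty);

    Response.Headers.Set("Content-Security-Policy", csp);
}
```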

As you can see, we do a little more here:

- We check whether the flag is present in the context, and get out if it is not

- We check whether there is a CSP header; if not, there is nothing to do, so we get out

- We add 'unsafe-inline' to the script-src directive, if the CSP has one (without a script-src directive, browsers fall back to default-src, which this code does not extend)

- We remove any nonce from the CSP

- We set the new value in the Content-Security-Policy header
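The header rewrite itself does not depend on Sitecore or on the request pipeline, so it can be pulled out into a pure function and tested in isolation. The following is a minimal sketch with a hypothetical class name. It matches the directive name with a regular expression instead of a plain string replace, so script-src-elem and script-src-attr directives are left intact; like the steps above, it removes nonces from every directive, style-src included.

```csharp
using System.Text.RegularExpressions;

// Hypothetical helper: the CSP rewrite for pages with a form, as a pure function.
public static class FormCspRewriter
{
    // Matches the directive name "script-src" only. The lookahead rejects
    // "script-src-elem" and "script-src-attr", which a plain string Replace would mangle.
    private static readonly Regex ScriptSrc = new Regex(@"\bscript-src(?=\s|;|$)");

    // Matches a nonce source such as 'nonce-abc123' together with the whitespace before it.
    private static readonly Regex Nonce = new Regex(@"\s*'nonce-[^']+'");

    public static string AllowInlineScripts(string csp)
    {
        // Nothing to rewrite: no CSP header was set.
        if (string.IsNullOrEmpty(csp))
        {
            return csp;
        }

        // Add 'unsafe-inline' right after the script-src directive name.
        var result = ScriptSrc.Replace(csp, "script-src 'unsafe-inline'");

        // Remove every nonce, in all directives, because a nonce
        // makes browsers ignore 'unsafe-inline'.
        return Nonce.Replace(result, string.Empty);
    }
}
```

In Application_EndRequest the call would then be `Response.Headers.Set("Content-Security-Policy", FormCspRewriter.AllowInlineScripts(csp));`. One caveat: if your policy also has a script-src-elem directive, browsers check inline `<script>` elements against that directive rather than script-src, so it would need the same treatment.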

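It is important to also remove the nonce. When a directive (script-src for scripts, style-src for styles) contains both a nonce and 'unsafe-inline', browsers that support nonces ignore 'unsafe-inline' and only allow inline elements that carry the matching nonce. The same happens when the directive contains a hash source ('sha256-...') or, in script-src, 'strict-dynamic'. So if we kept the nonce, adding 'unsafe-inline' would not do anything.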
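To check whether a given policy will really let the inline script run, this rule can be expressed as a small predicate. This is a minimal sketch with hypothetical names, following the CSP Level 2/3 behavior: 'unsafe-inline' in script-src only takes effect when the directive contains no nonce, no hash source and no 'strict-dynamic'.

```csharp
using System;
using System.Linq;

// Hypothetical helper: does 'unsafe-inline' in a script-src value take effect?
public static class CspInlineCheck
{
    // Source expressions whose presence makes browsers ignore 'unsafe-inline'.
    private static readonly string[] IgnoringPrefixes =
        { "'nonce-", "'sha256-", "'sha384-", "'sha512-" };

    public static bool UnsafeInlineIsEffective(string scriptSrcValue)
    {
        // Split the directive value into individual source expressions.
        var sources = scriptSrcValue.Split(new[] { ' ', '\t' }, StringSplitOptions.RemoveEmptyEntries);

        // CSP keywords are case-insensitive, so compare that way.
        bool hasUnsafeInline = sources.Contains("'unsafe-inline'", StringComparer.OrdinalIgnoreCase);

        // Any nonce or hash source makes browsers ignore 'unsafe-inline'.
        bool hasNonceOrHash = sources.Any(s =>
            IgnoringPrefixes.Any(p => s.StartsWith(p, StringComparison.OrdinalIgnoreCase)));

        // So does 'strict-dynamic' (CSP Level 3).
        bool hasStrictDynamic = sources.Contains("'strict-dynamic'", StringComparer.OrdinalIgnoreCase);

        return hasUnsafeInline && !hasNonceOrHash && !hasStrictDynamic;
    }
}
```

For example, `CspInlineCheck.UnsafeInlineIsEffective("'self' 'unsafe-inline' 'nonce-abc123'")` returns false, while the same value without the nonce returns true. Browsers that predate nonces do honor 'unsafe-inline', which is why some policies list both as a fallback.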

Conclusion

We fixed the redirects on the forms without adding 'unsafe-inline' to every page: only pages that contain a form get the relaxed script policy. As far as we could find, that is the best solution available here.